Wake: Exploring Co-Located Co-Presence in Virtual Reality

Wake is a guided mixed reality experience for one participant in-headset, one dancer, and one facilitator. Wake immerses the participant in a virtual environment and uses spoken instructions and movement to help them navigate the space, interact with virtual and tangible objects, and encounter a co-located, virtually present dancer who invites them to join in simple collaborative movement.

The experience is built in Unity 3D for the HTC Vive and Vive trackers, with an Intel RealSense depth camera placed at the position of the participant’s eyes in the headset. The project uses a custom algorithm to stream and render, in real time, a high-resolution volumetric video (synced depth + RGB feeds) of a physically and virtually co-present dancer.
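As a rough illustration of what rendering a synced depth + RGB feed involves, the sketch below back-projects one depth frame into colored 3D points using a pinhole camera model. It is not the project's custom algorithm, which is not described here. The function name `backproject`, the intrinsics `fx`, `fy`, `cx`, `cy`, the 1 mm depth scale, and the 4 m cutoff are all assumptions chosen for the example, and the frame is assumed to have depth already aligned to the color image.

```python
from typing import List, Tuple

Color = Tuple[int, int, int]
Point = Tuple[float, float, float, Color]


def backproject(
    depth: List[List[int]],    # raw depth values, one per pixel (assumed aligned to RGB)
    rgb: List[List[Color]],    # color frame with the same dimensions as depth
    fx: float, fy: float,      # focal lengths in pixels (hypothetical intrinsics)
    cx: float, cy: float,      # principal point in pixels (hypothetical intrinsics)
    depth_scale: float = 0.001,  # assumed: one raw unit equals 1 mm
    max_depth_m: float = 4.0,    # assumed cutoff to drop the background
) -> List[Point]:
    """Turn a depth + RGB frame into a list of (x, y, z, color) points in meters."""
    points: List[Point] = []
    for v, row in enumerate(depth):
        for u, raw in enumerate(row):
            if raw == 0:
                continue  # treat 0 as "no depth reading" for this pixel
            z = raw * depth_scale
            if z > max_depth_m:
                continue  # skip anything beyond the cutoff distance
            # Pinhole model: pixel offset from the principal point, scaled by depth
            x = (u - cx) * z / fx
            y = (v - cy) * z / fy
            points.append((x, y, z, rgb[v][u]))
    return points
```

In an engine such as Unity, the resulting points would typically be uploaded to the GPU each frame and drawn as a point cloud or mesh; that step is omitted here.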

Created in collaboration with the multidisciplinary performance duo slowdanger (Anna Thompson and Taylor Knight), this work continues our research inquiry into embodied interaction, kinesthetic awareness in mediated experiences, trust and emotion within co-present head-mounted XR experiences, and the shifting boundaries between physical and digital.

Wake Featured by Intel RealSense

A user study (N = 25) conducted with Wake examined embodied interaction, affect, and social presence. The study combined semi-structured phenomenological interviews, standardized metrics for self-reported emotion, and proxemics data measured in real-world units. The findings are presented in my Master of Science thesis, “We’re in this together: Embodied interaction, affect, and design methods in asymmetric, co-located, co-present mixed reality.”

Role: Artist/Designer, Principal Investigator: lead project development and execution, technical and design direction, design user study, manage research assistants, analyze user study data, collect findings in thesis

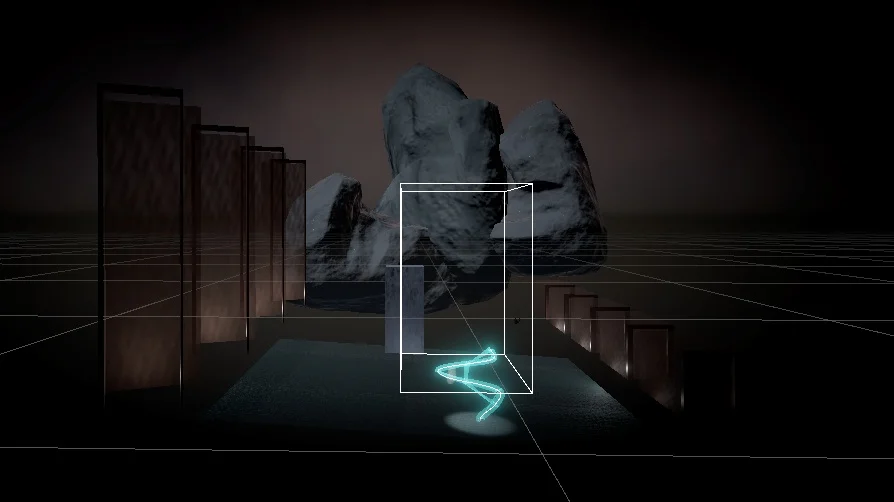

Wake Sketch: User Flow Diagram

User Study

Left: In-VR view

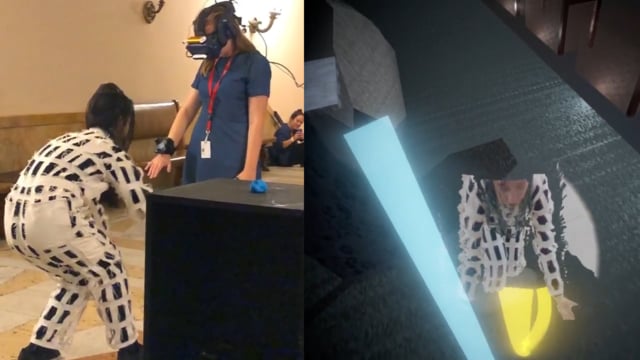

Top Right: Physical Space with Participant and Dancers

Bottom Right: Unity Game Engine

Wake: First Prototypes

Images from Wake Prototypes 1 and 2, performed at the Carnegie Museum of Art and at the Thrival Festival Humans X Tech event. slowdanger (Anna Thompson and Taylor Knight) performed.

Wake Prototype 1, performed at the Carnegie Museum of Art, September 2018. Ru Emmons performed the dancer’s role.